Geomatics Algorithms for Target Localization

By Avionics Team | November 25, 2024

As part of our SUAS 2025 mission planning, the Geomatics team is developing a custom localization algorithm to convert visual detections into accurate GPS coordinates. This system is designed to work in conjunction with our onboard imaging platform and computer vision pipeline.

Captured images from the UAV’s camera are first geotagged based on onboard GPS and IMU data. These images are transmitted to the Ground Control Station (GCS), where a YOLOv11-based detection model, deployed in a Docker container with GPU acceleration, identifies and classifies visible targets within the drop zone.
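The post does not say how the GPS and IMU streams are matched to each frame. A common approach, sketched here as an assumption, is to interpolate the navigation log to the image capture time. Position, roll and pitch are interpolated linearly, and heading is interpolated along the shorter arc so that a turn from 350° to 10° is not averaged to 180°. `NavSample` and `geotag` are hypothetical names, not part of the team's code.

```python
import bisect
from dataclasses import dataclass
from typing import List


@dataclass
class NavSample:
    t: float      # timestamp, seconds
    lat: float    # degrees
    lon: float    # degrees
    alt: float    # metres
    roll: float   # degrees
    pitch: float  # degrees
    yaw: float    # degrees, 0-360 clockwise from north


def _lerp(a: float, b: float, f: float) -> float:
    return a + (b - a) * f


def _lerp_angle(a: float, b: float, f: float) -> float:
    """Interpolate headings in degrees along the shorter arc (e.g. 350 -> 10)."""
    diff = (b - a + 180.0) % 360.0 - 180.0
    return (a + diff * f) % 360.0


def geotag(samples: List[NavSample], t_image: float) -> NavSample:
    """Interpolate a time-sorted navigation log to an image capture time."""
    times = [s.t for s in samples]
    if not times[0] <= t_image <= times[-1]:
        raise ValueError("image time is outside the navigation log")
    i = bisect.bisect_right(times, t_image)
    if i == len(samples):  # exactly at the last sample
        return samples[-1]
    a, b = samples[i - 1], samples[i]  # a.t <= t_image < b.t
    f = (t_image - a.t) / (b.t - a.t)
    return NavSample(
        t=t_image,
        lat=_lerp(a.lat, b.lat, f),
        lon=_lerp(a.lon, b.lon, f),
        alt=_lerp(a.alt, b.alt, f),
        roll=_lerp(a.roll, b.roll, f),
        pitch=_lerp(a.pitch, b.pitch, f),
        yaw=_lerp_angle(a.yaw, b.yaw, f),
    )
```

Linear interpolation of latitude and longitude is adequate over the short interval between navigation samples; it does not handle the antimeridian.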

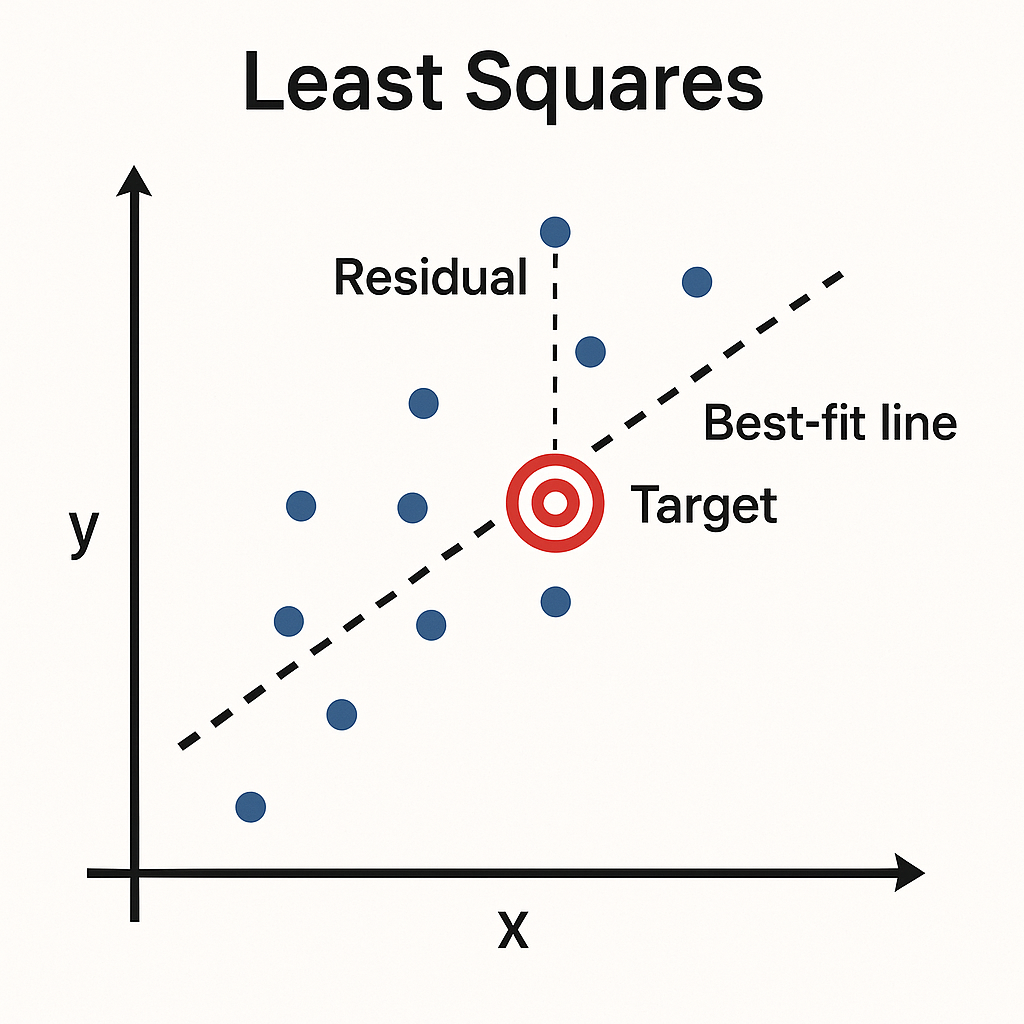

Each detection is labeled with a class ID, and all detections that share a class ID are passed to a custom adjustment model that estimates the target's final geographic coordinates. This model is based on a Least Squares Regression (LSR) technique optimized for UAV-based imaging applications.
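The post does not show how detections are organized before adjustment. The sketch below assumes each detection already carries a rough ground position in local metres derived from its image geotag; `Detection`, `group_by_class`, `initial_estimate`, and the 0.5 confidence cutoff are all hypothetical. It pools detections by class ID and computes the simplest least squares estimate for repeated direct observations of one point, the arithmetic mean, which could serve as a starting approximation for the iterative adjustment.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass
class Detection:
    image_id: str
    class_id: int
    east: float        # rough ground position from the image geotag, metres
    north: float
    confidence: float  # detector score in [0, 1]


def group_by_class(dets: List[Detection],
                   min_conf: float = 0.5) -> Dict[int, List[Detection]]:
    """Pool detections of the same class across all images."""
    groups: Dict[int, List[Detection]] = defaultdict(list)
    for d in dets:
        if d.confidence >= min_conf:  # hypothetical filter for weak detections
            groups[d.class_id].append(d)
    return dict(groups)


def initial_estimate(group: List[Detection]) -> Tuple[float, float]:
    """Equal-weight least squares estimate of a point from direct observations.

    For repeated direct observations of the same coordinate, the least squares
    solution is the arithmetic mean.
    """
    n = len(group)
    return (sum(d.east for d in group) / n, sum(d.north for d in group) / n)
```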

Target Localization Algorithm:

- Iterative Adjustment: Because the observation model is non-linear, the regression is solved iteratively, refining the coordinate estimate with each cycle.

- Dynamic Convergence Threshold: Convergence is determined by a dynamically calculated threshold based on the nominal deviation in observed detections.

- Ranging Equation Calibration: The model uses a modified form of the ranging observation equation. It accounts for both internal camera properties (focal length, resolution, pixel dimensions) and external UAV geometry (lever arm offsets and attitude).
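The team's modified ranging equation is not published, so the sketch below uses the standard 3D range equation as a stand-in and shows how the three ideas above fit together. Assumptions, all hypothetical: a local East/North/Up frame with flat ground at U = 0, a nadir-pointing camera in level flight (only heading rotates the lever arm; roll and pitch are ignored), and a dynamic threshold defined as k times σ₀, the a-posteriori standard deviation of the range residuals. `camera_position`, `slant_range`, and `adjust_target` are illustrative names. The solver is a Gauss-Newton iteration: linearize, solve the 2×2 normal equations, update, repeat.

```python
import math
from typing import Sequence, Tuple

Vec3 = Tuple[float, float, float]  # local East, North, Up in metres


def camera_position(antenna_enu: Vec3, lever_frd: Vec3, yaw_rad: float) -> Vec3:
    """Apply a body-frame lever arm (forward, right, down) to the GPS antenna.

    Assumes level flight: only heading (clockwise from north) rotates the
    lever arm; roll and pitch are ignored in this sketch.
    """
    fwd, right, down = lever_frd
    s, c = math.sin(yaw_rad), math.cos(yaw_rad)
    e, n, u = antenna_enu
    return (e + fwd * s + right * c, n + fwd * c - right * s, u - down)


def slant_range(u_px: float, v_px: float, cx: float, cy: float,
                pixel_mm: float, focal_mm: float, height_m: float) -> float:
    """Range from the camera to a ground target seen at pixel (u, v).

    Assumes a nadir-pointing camera over flat ground: tan(theta) = r / f,
    and range = height / cos(theta).
    """
    r_mm = math.hypot(u_px - cx, v_px - cy) * pixel_mm  # offset on the sensor
    return height_m * math.sqrt(1.0 + (r_mm / focal_mm) ** 2)


def adjust_target(cams: Sequence[Vec3], ranges: Sequence[float],
                  k: float = 0.01, floor: float = 1e-9,
                  max_iter: int = 50) -> Tuple[float, float, int]:
    """Iterative (Gauss-Newton) least squares for a target's ground (E, N).

    Observation equation: rho_i = sqrt((E - E_i)^2 + (N - N_i)^2 + U_i^2).
    Stops when the correction is shorter than max(k * sigma0, floor).
    """
    n_obs = len(cams)
    if n_obs < 3 or n_obs != len(ranges):
        raise ValueError("need matching camera/range lists with at least 3 entries")
    # Initial approximation: mean horizontal camera position.
    east = sum(c[0] for c in cams) / n_obs
    north = sum(c[1] for c in cams) / n_obs
    for it in range(1, max_iter + 1):
        n11 = n12 = n22 = u1 = u2 = sum_w2 = 0.0
        for (ce, cn, cu), rho in zip(cams, ranges):
            de, dn = east - ce, north - cn
            model = math.sqrt(de * de + dn * dn + cu * cu)
            a1, a2 = de / model, dn / model  # partial derivatives (design row)
            w = rho - model                  # misclosure: observed - computed
            n11 += a1 * a1
            n12 += a1 * a2
            n22 += a2 * a2
            u1 += a1 * w
            u2 += a2 * w
            sum_w2 += w * w
        det = n11 * n22 - n12 * n12
        if abs(det) < 1e-12:
            raise ValueError("normal matrix is singular; check camera geometry")
        # Solve the 2x2 normal equations (A^T A) dx = A^T w.
        d_e = (n22 * u1 - n12 * u2) / det
        d_n = (n11 * u2 - n12 * u1) / det
        east += d_e
        north += d_n
        # Residuals at the estimate before this update; 2 unknowns.
        sigma0 = math.sqrt(sum_w2 / (n_obs - 2))
        if math.hypot(d_e, d_n) < max(k * sigma0, floor):
            return east, north, it
    raise RuntimeError("adjustment did not converge")
```

Tying the threshold to σ₀ means that, with real noisy detections, iteration stops once corrections are a small fraction of the observation noise rather than chasing an arbitrary fixed tolerance. The `floor` only matters for noise-free test data, where σ₀ tends to zero.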

Once converged, the algorithm produces corrected latitude and longitude values for each target. These are returned to the mission computer and uploaded to Mission Planner as part of the autonomous payload drop routine.
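If the adjustment runs in a local metric frame (an assumption; the post only states that latitude and longitude come out), the corrected east/north offsets have to be converted back to geodetic coordinates before upload. A minimal sketch using the WGS84 meridian and prime-vertical radii of curvature at a reference point; this small-area approximation should be adequate for a drop zone a few hundred metres across. The function names are hypothetical.

```python
import math
from typing import Tuple

WGS84_A = 6378137.0                   # semi-major axis, metres
WGS84_F = 1.0 / 298.257223563         # flattening
WGS84_E2 = WGS84_F * (2.0 - WGS84_F)  # first eccentricity squared


def _radii(lat_deg: float) -> Tuple[float, float]:
    """Meridian (M) and prime-vertical (N) radii of curvature at a latitude."""
    s = math.sin(math.radians(lat_deg))
    w = math.sqrt(1.0 - WGS84_E2 * s * s)
    return WGS84_A * (1.0 - WGS84_E2) / w ** 3, WGS84_A / w


def enu_to_latlon(east: float, north: float,
                  lat0: float, lon0: float) -> Tuple[float, float]:
    """Convert local offsets (metres) around (lat0, lon0) to degrees."""
    m, n = _radii(lat0)
    lat = lat0 + math.degrees(north / m)
    lon = lon0 + math.degrees(east / (n * math.cos(math.radians(lat0))))
    return lat, lon


def latlon_to_enu(lat: float, lon: float,
                  lat0: float, lon0: float) -> Tuple[float, float]:
    """Inverse of enu_to_latlon under the same approximation."""
    m, n = _radii(lat0)
    north = math.radians(lat - lat0) * m
    east = math.radians(lon - lon0) * n * math.cos(math.radians(lat0))
    return east, north
```

`latlon_to_enu` is the matching forward step for bringing geotagged camera positions into the same local frame.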

By refining geolocation accuracy through post-processing adjustment, we can keep the hardware simple while meeting SUAS precision requirements. The model is designed to be lightweight and easy to integrate, with minimal runtime overhead, so that it can run on our Raspberry Pi 5 mission computer alongside other flight-critical tasks.